Empowering Government Agencies through Responsible and Explainable AI Adoption

The Perception and Definition of AI

Is AI the big bad wolf we fear or the long-awaited tech breakthrough? AI's rapid arrival in our everyday lives is undeniable, and its fast evolution has cemented its presence. Despite ominous narratives that portray AI as the evil Terminator or Skynet, our reality is far from those extremes. Yet we face immediate challenges, such as deepfakes, hallucinations, and misinformation, that AI could amplify. Hence, robust Responsible AI frameworks are paramount.

As per the Health and Human Services (HHS) AI Playbook, to truly determine if a particular solution/system qualifies as AI, we need to assess whether it:

- Performs tasks autonomously in various scenarios, learning from data and experience.

- Employs tech to mimic human-like skills.

- Exhibits human-like cognitive traits.

- Uses methodologies, such as machine learning, for cognitive tasks.

- Achieves goals rationally using perception, planning, reasoning, decision-making, etc.

Growing AI Landscape, yet Underutilized in Government

Forbes projects a staggering 37.3% annual growth in the global AI market, expecting it to soar to more than $1.8 trillion by 2030, with North America's GDP set to rise by 14.5%. However, an O’Reilly report highlights a gap in the government sector, where only 9% have active AI projects. While nearly half are exploring AI's potential, a significant portion isn't utilizing it, partly due to outdated IT systems and concerns about misuse. The government's transition to actual AI projects remains sluggish despite substantial interest.

AI’s Unique Role in Government

President Biden’s recent Executive Order marks a significant step in how our government handles AI. It focuses on responsible AI use, sets safety standards, protects privacy, and upholds fairness and civil rights. Plus, it pushes for innovation, competition, and global teamwork while making it easier to put AI to work in government.

AI isn't new. Statistical models have been in use since the 1950s. Private corporations and social media companies have employed data and algorithms for decades to automate and influence people's decisions. In AI's evolution, we have transitioned from manned contact centers to chatbots and advanced generative AI, promising improved government-citizen interactions.

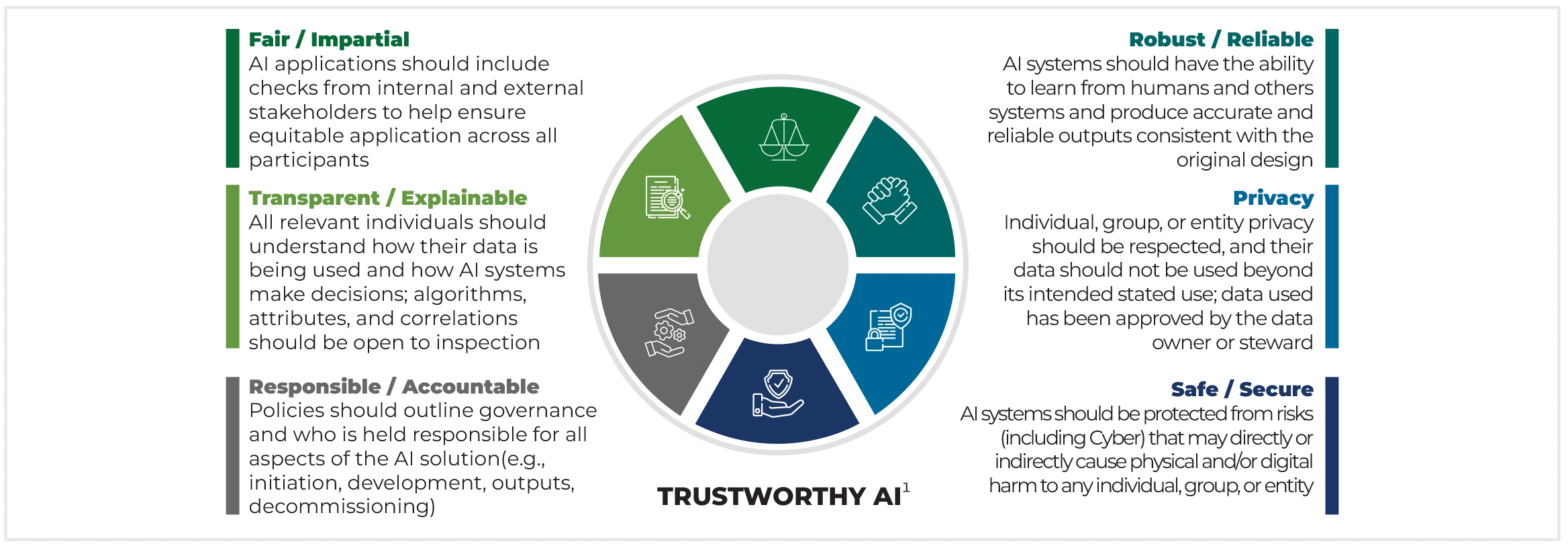

Unlike commercial applications, AI in government goes beyond optimization: it must uphold public trust and confidence while protecting privacy, promoting equity, and adhering to applicable regulations. Agencies should consider a Responsible AI framework and a continuous verification model to mitigate bias, privacy, and legal risks. Such a model calls for diverse, unbiased data selection, ongoing algorithm adjustments, and compliance monitoring, minimizing discrimination based on keywords or specified criteria. Involving stakeholders from different backgrounds helps keep the process fair. This approach builds trust and reduces resistance to AI adoption in both the government and private sectors.
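One way to picture the compliance-monitoring part of a continuous verification model is a periodic check that compares how often a recommendation is made for different groups. The sketch below is illustrative only: the field names (`group`, `flagged`), the function names, and the 0.8 threshold are all assumptions made for this example, not part of any HHS guidance or agency system.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of flagged records for each group."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for rec in records:
        totals[rec["group"]] += 1
        if rec["flagged"]:
            flagged[rec["group"]] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def needs_review(records, min_ratio=0.8):
    """True when the lowest group rate is below min_ratio times the highest.

    min_ratio is a placeholder threshold (an assumption); a real program
    would set it with policy and legal input.
    """
    rates = selection_rates(records)
    if not rates:
        return False  # nothing to compare yet
    highest = max(rates.values())
    if highest == 0:
        return False  # no group was flagged at all
    return min(rates.values()) / highest < min_ratio


# Hypothetical sample data for illustration
sample = [
    {"group": "A", "flagged": True},
    {"group": "A", "flagged": False},
    {"group": "B", "flagged": True},
    {"group": "B", "flagged": True},
]
print(selection_rates(sample))
print(needs_review(sample))
```

A check like this does not prove or disprove bias on its own. It only signals when people should take a closer look at the data and the model.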

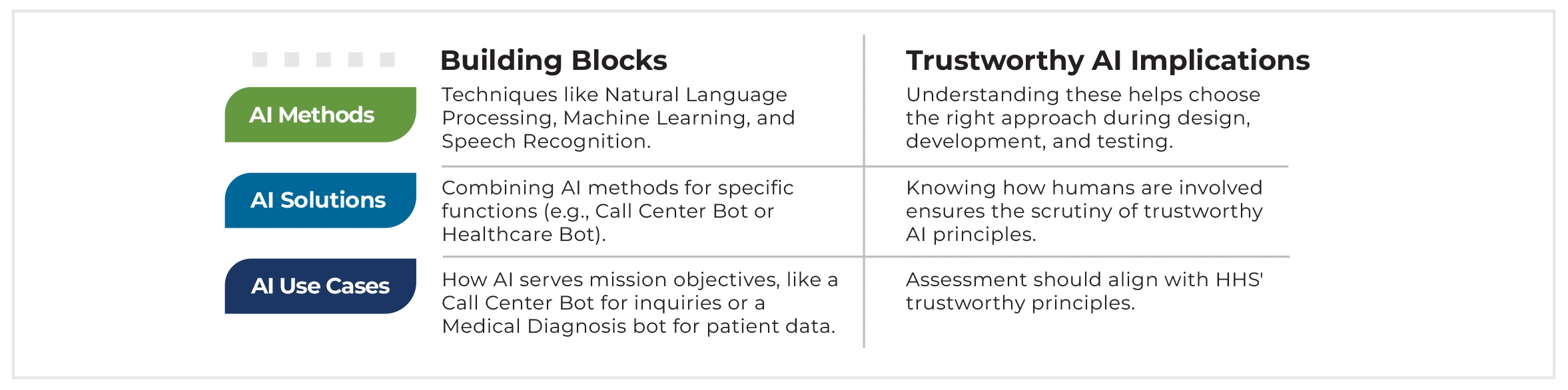

Responsible AI Building Blocks

For trustworthy AI in HHS, protocols for AI methods, solutions, and use cases are essential. The US DHHS’s AI Playbook provides the following perspective on it:

By consistently applying these principles, as outlined by the US DHHS, agencies can harness the full strategic and operational advantages of AI.

What Can Go Wrong

When it comes to AI, we have seen both positive and not-so-positive examples.

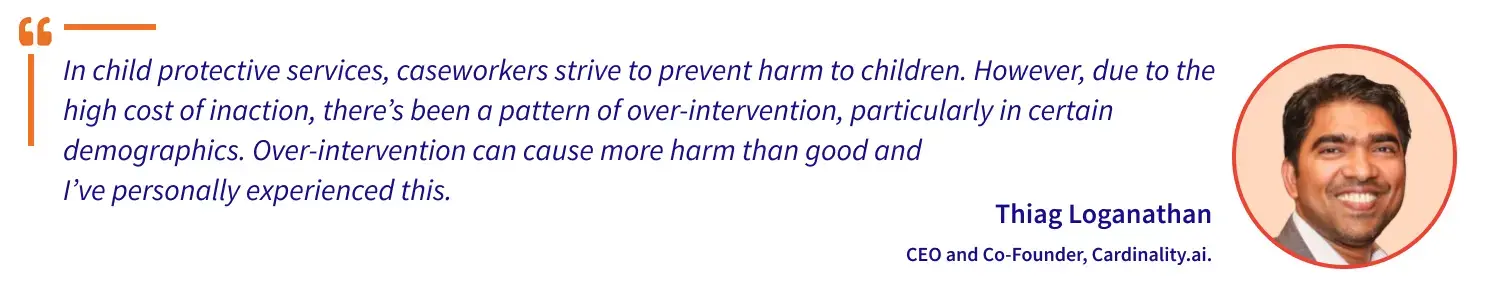

Consider this instance - even if a juvenile has a high chance of repeating offenses, it does not conclusively mean he/she cannot be rehabilitated and have a change in behavior/outcomes. Unlike AI, a caseworker can dig deeper into the contributing factors leading to delinquent behavior, including familial background and trauma. The caseworker considers personal circumstances, family support, and other critical factors the AI might miss. The caseworker's deep understanding enables decisions based on a person's situation, not just statistics or predictions.

The better approach is for AI to guide and suggest (nudge), not decide outright, to avoid negatively impacting a young person's path. By offering insights, not independent scores, AI assists human decision-makers, prioritizing and highlighting relevant data points rapidly for informed assessments.

When effectively implemented, AI can analyze data patterns to suggest pathways of action and recommendations. Not every reported case requires immediate intervention. By impartially scrutinizing reported incidents, AI can aid caseworkers in prioritizing interventions based on historical and current data points available. It refrains from generating a risk score, as scores might introduce bias into decisions, either by prompting action or inaction. This approach ensures that resources are optimally allocated to assist the most vulnerable children and families.
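The idea of highlighting relevant data points without producing a risk score could be sketched roughly as follows. Everything here is hypothetical: the report fields, the rules, and the wording of the reasons are placeholders chosen for illustration, not criteria drawn from any real child welfare program.

```python
from dataclasses import dataclass


@dataclass
class Insight:
    factor: str   # the report field that triggered the highlight
    reason: str   # plain-language explanation shown to the caseworker


# Hypothetical rules (assumptions): field name, check, and explanation
RULES = [
    ("prior_reports", lambda v: v >= 3, "Three or more prior reports on file"),
    ("caregiver_support", lambda v: v is False, "No supporting caregiver identified"),
    ("recent_school_absences", lambda v: v >= 10, "Ten or more recent school absences"),
]


def highlight(report):
    """Return explained data points for a caseworker; no score is computed."""
    insights = []
    for field, check, reason in RULES:
        if field in report and check(report[field]):
            insights.append(Insight(field, reason))
    return insights


# Hypothetical report for illustration
report = {"prior_reports": 4, "caregiver_support": True}
for insight in highlight(report):
    print(f"{insight.factor}: {insight.reason}")
```

The output is a list of reasons rather than a number, so the caseworker sees why something was surfaced and remains the one who decides what, if anything, to do about it.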

Training data sets distorted by such scenarios will introduce biases. To address this, it is crucial to avoid applying AI models to use cases where they are likely to introduce bias. Doing so keeps AI fairer and more reliable as an aid to workers.

The core principle persists: AI should enable faster and better decision-making rather than making those decisions autonomously.

Enabler, Not a Decision-Maker - Cardinality's Approach

Cardinality improves citizen service delivery through AI features and functionalities that are readily configurable to meet a program’s specific needs. We aim to assist and augment decision-making, not replace it. We work within the guardrails and have made our AI explainable, so workers and citizens clearly understand why a certain recommendation is made.

AI as a decision enabler rather than a decision maker allows:

- Data Driven but Human Decision Making: Enables clear, accountable decision processes.

- Ethical & Explainable Use of AI: Ensures decisions align with ethical standards.

- Empowered Social Worker vs Unexplainable Automation: Improves worker efficacy and trust in the use of AI.

- Measured Human Intervention: Gives caseworkers confidence when choosing inaction or light intervention.

Overcoming Adoption Challenges in HHS

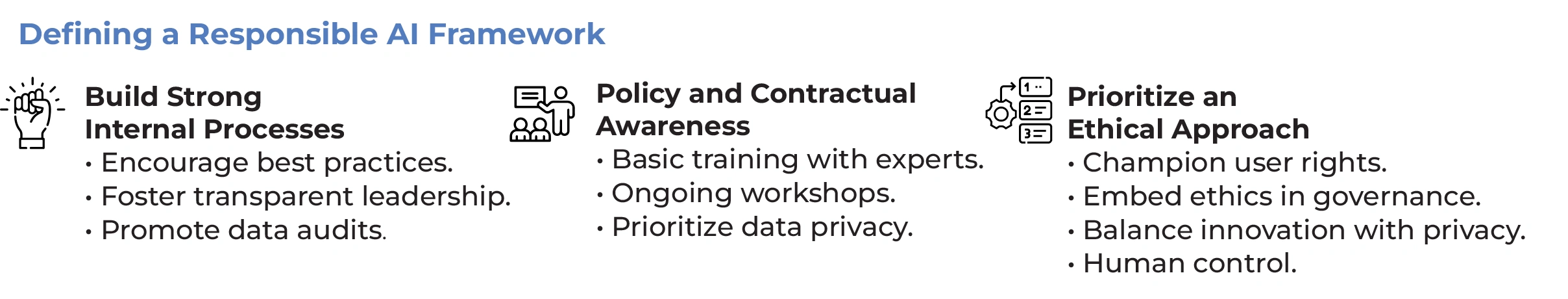

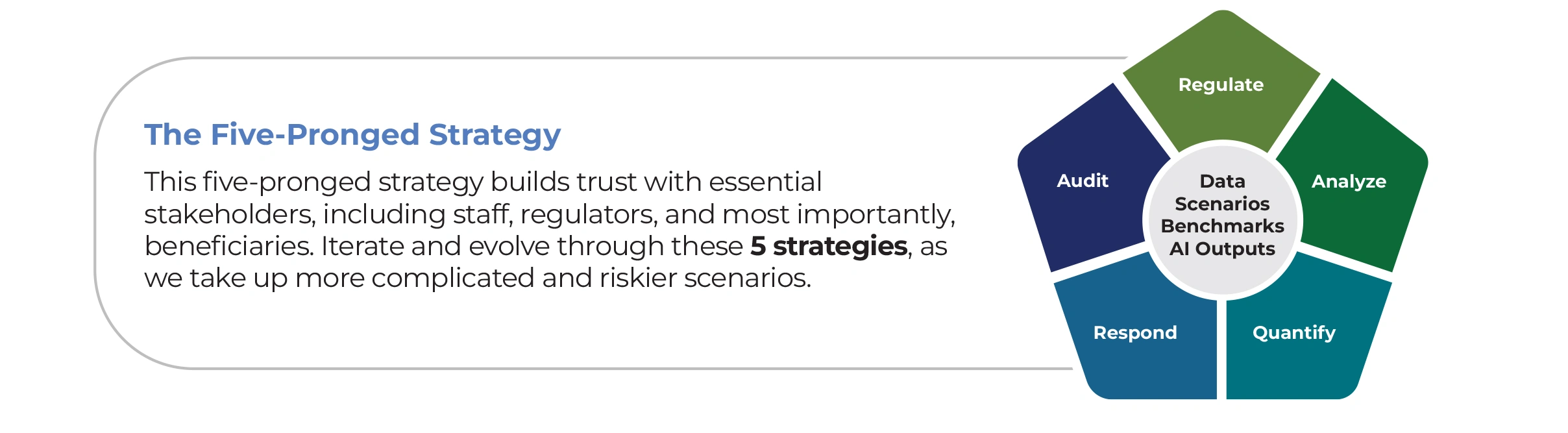

To improve AI adoption, users and regulators should have absolute confidence. This is where a robust, responsible AI framework like the one suggested below becomes essential.

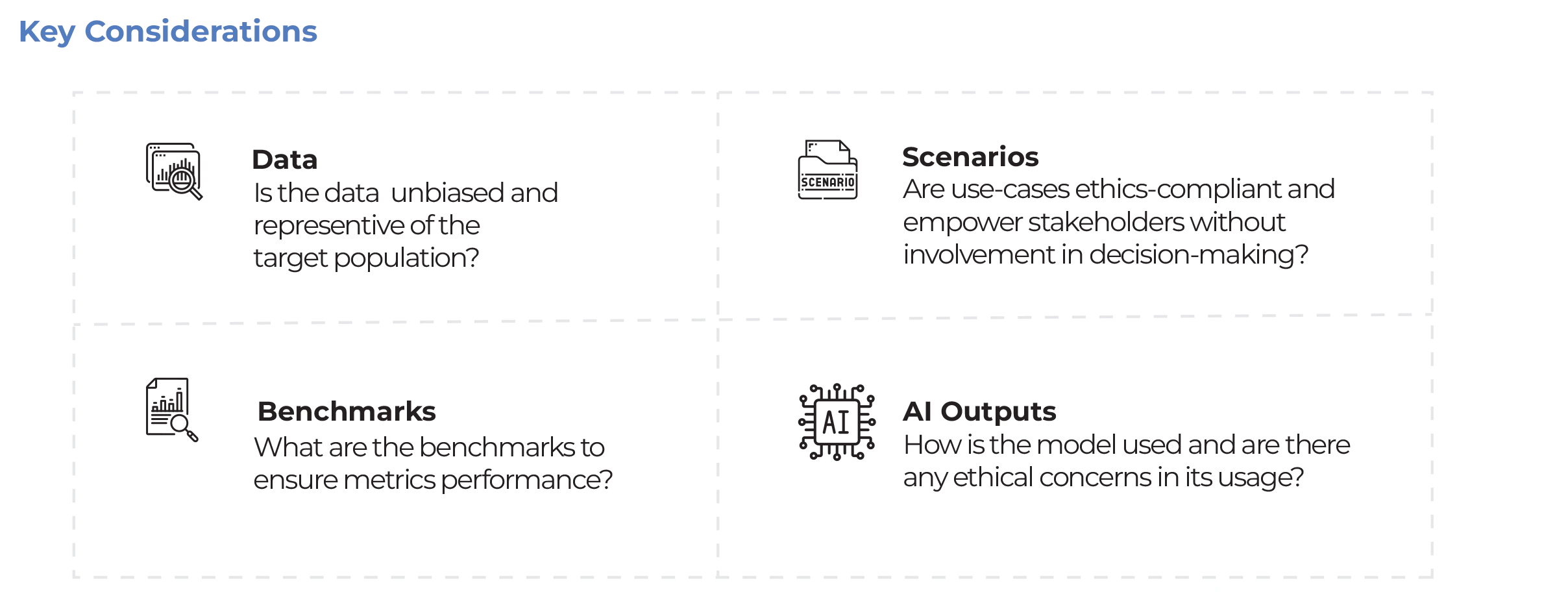

While AI can enhance outcomes in various HHS use cases, it's essential to consider key factors when implementing them.

Conclusion

AI has arrived faster than expected, and its presence is here to stay. While it’s constantly evolving, ignoring AI isn't an option. Instead, we must find ways to use it responsibly, with a Responsible AI framework in place.

This HHS report shows that 70% of business leaders back AI-driven government projects, 80% of agencies are progressing in digital maturity, and by 2024, 75% of governments will launch three enterprise-wide hyper-automation initiatives.

The potential AI uses are vast - optimizing resource allocation in social services, detecting and preventing financial fraud, aiding law enforcement in criminal case preparation, assessing policy impact through sentiment analysis, coordinating emergency responses, ensuring regulatory compliance, enhancing public safety insights, streamlining administrative tasks, monitoring public health trends, and engaging citizens through feedback analysis. The stats and opportunities are promising, and the future holds tremendous potential; it is up to us to navigate it responsibly and ethically.

About the authors

As CEO and Co-Founder of Cardinality.ai, Thiag Loganathan is focused on improving the experiences of government workers and U.S. constituents in health and human services agencies. Having previously used data extensively to drive consumer purchasing for Fortune 50 companies, Thiag now directs his attention toward government initiatives.

With a dedicated team, he is committed to pioneering AI and data solutions that deliver positive societal impact at scale. Thiag's entrepreneurial journey includes leading DMI’s Big Data Insights Division and establishing Kalvin Consulting in 2007, a Data Analytics solution provider and an SAP Partner, acquired by DMI in May 2013.

Wendy Wilson, former CCWIS Project Director of Georgia DHS, now serves as the Director of Programs for Cardinality.ai. She has 25+ years of experience in child welfare, helping kids and families in different ways - supporting them at home and finding them new places to live when needed. She has worked in New York, Pennsylvania, and Georgia and has taught and helped other child welfare professionals. She has extensive knowledge of SDLC and has overseen development and maintenance activities for a State’s CCWIS system.

Additionally, she has been involved in implementing a State’s new practice model and interfaces between the State’s CCWIS and several State Agencies (Department of Education, Department of Early Childcare and Learning, Department of Public Health, and Department of Community Health), and has overseen the development of a Data Lake for screening client history across six disparate systems. As a strategic leader, Wendy spearheads our projects, people, and technology practice and shares her deep knowledge about how child welfare works across the country.

Karrthik Shettyy, the Director of Content Marketing at Cardinality.ai, brings expertise in content, marketing, sales, and communications. Over the past 15 years, Karrthik has supported companies ranging from startups to the Fortune 500 in their strategic content development and marketing efforts. His passion for writing and editing complements his love for technology and marketing.

He crafts compelling content across various sectors - B2B, B2C, and B2G - finding clarity and creativity in his work. Karrthik’s career trajectory has seen him don multiple hats - from bootstrapped entrepreneur to marketer to numbers-driven sales director - while keeping his love for creative writing intact.